Cloud Resume Challenge Part 4 - Building the Backend Infrastructure for the Cloud Resume Challenge Website with AWS and Terraform

Table of contents

- Step 1: Setting Up Terraform and AWS Provider

- Step 2: Configuring IAM Roles and Policies

- Step 3: Packaging and Deploying the Lambda Function

- Step 4: Creating CloudWatch Log Group and API Gateway

- Step 5: Setting Up API Gateway Stage

- Step 6: Integrating API Gateway with Lambda Function

- Step 7: Defining API Gateway Route

- Step 8: Granting Lambda Permission for API Gateway

- Step 9: Creating DynamoDB Table

- Step 10: Deployment

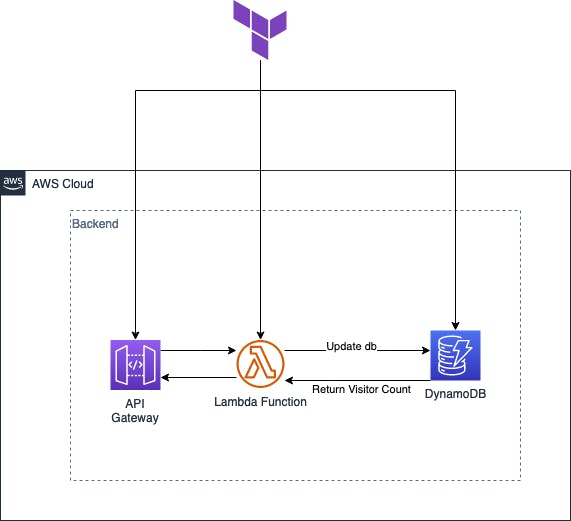

In this blog post, I will walk you through building the backend infrastructure for the Cloud Resume Challenge website with AWS services and Terraform. By following the steps below, you will define the IAM permissions, Lambda function, API Gateway, CloudWatch log group and DynamoDB table behind the visitor counter as code. You can then create and manage them with a few Terraform commands.

Step 1: Setting Up Terraform and AWS Provider

To get started, make sure Terraform is installed on your machine and that AWS credentials are configured for it to use. Then create a file named main.tf and copy the following Terraform configuration into it:

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
  }

  required_version = ">= 1.2.0"
}

provider "aws" {
  region = "eu-west-2"
}
```

This configuration pins the AWS provider to the 4.x series, requires Terraform 1.2.0 or later, and creates all resources in the eu-west-2 (London) region.
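The `~> 4.0` constraint uses Terraform's pessimistic operator. Only the right-most listed component may increase, so `~> 4.0` accepts any 4.x release from 4.0 onward but not 5.0. The sketch below is written in Python, the language of the Lambda code used later. It is a simplified model of that rule for plain numeric versions only: it ignores pre-release tags and the other constraint operators, and the function names are hypothetical.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Split a dotted numeric version such as "4.67.0" into integers."""
    return tuple(int(part) for part in text.split("."))


def satisfies_pessimistic(version: str, constraint: str) -> bool:
    """Simplified check of `version` against a `~> constraint` rule.

    The candidate must be >= the constraint, and every component except
    the right-most one listed in the constraint must match exactly.
    """
    base = parse_version(constraint)
    candidate = parse_version(version)
    # Pad both to the same length so "4.0" compares like "4.0.0".
    width = max(len(base), len(candidate))
    base_padded = base + (0,) * (width - len(base))
    cand_padded = candidate + (0,) * (width - len(candidate))
    if cand_padded < base_padded:
        return False
    fixed = len(base) - 1  # components that are not allowed to change
    return cand_padded[:fixed] == base_padded[:fixed]


print(satisfies_pessimistic("4.67.0", "4.0"))  # True
print(satisfies_pessimistic("5.0.0", "4.0"))   # False
```

A three-part constraint such as `~> 1.0.4` is stricter. It allows 1.0.10 but not 1.1.0, which the same function reproduces.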

Step 2: Configuring IAM Roles and Policies

Next, let's define an IAM role and policy for the Lambda function behind the website's visitor counter. Add the following code to your main.tf file:

```hcl
# Execution role that the Lambda service is allowed to assume
resource "aws_iam_role" "lambda_role" {
  name = "terraform_lambda_func_Role"

  assume_role_policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Action" : "sts:AssumeRole",
        "Principal" : {
          "Service" : "lambda.amazonaws.com"
        },
        "Effect" : "Allow",
        "Sid" : ""
      }
    ]
  })
}

# Permissions: write CloudWatch Logs, read and update the visitor count table
resource "aws_iam_policy" "iam_policy_for_lambda" {
  name        = "aws_iam_policy_for_terraform_lambda_func_role"
  path        = "/"
  description = "AWS IAM Policy for managing aws lambda role"

  policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Action" : [
          "logs:CreateLogGroup",
          "logs:CreateLogStream",
          "logs:PutLogEvents"
        ],
        "Resource" : "arn:aws:logs:*:*:*",
        "Effect" : "Allow"
      },
      {
        "Effect" : "Allow",
        "Action" : [
          "dynamodb:UpdateItem",
          "dynamodb:GetItem",
          "dynamodb:PutItem"
        ],
        # Reference the table defined in Step 9 instead of a hard-coded ARN
        "Resource" : aws_dynamodb_table.visitor_count_ddb.arn
      }
    ]
  })
}

resource "aws_iam_role_policy_attachment" "attach_iam_policy_to_iam_role" {
  role       = aws_iam_role.lambda_role.name
  policy_arn = aws_iam_policy.iam_policy_for_lambda.arn
}
```

This code defines an IAM role that the Lambda service can assume. It also defines a policy, attached to that role, which lets the function write to CloudWatch Logs and read, create and update items in the DynamoDB table.
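To see what the two statements do and do not allow, here is a toy Python evaluator that mirrors them. It is a deliberate simplification and not how IAM is implemented. Real evaluation also handles explicit Deny statements, conditions, action wildcards and case-insensitive action names. The account ID in `TABLE_ARN` is a hypothetical placeholder.

```python
from fnmatch import fnmatchcase

# Mirrors the two Allow statements in the Terraform policy above.
# The account ID in the table ARN is a hypothetical placeholder.
TABLE_ARN = "arn:aws:dynamodb:eu-west-2:123456789012:table/visitor_count_ddb"
STATEMENTS = [
    {
        "Effect": "Allow",
        "Action": ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
        "Resource": "arn:aws:logs:*:*:*",
    },
    {
        "Effect": "Allow",
        "Action": ["dynamodb:UpdateItem", "dynamodb:GetItem", "dynamodb:PutItem"],
        "Resource": TABLE_ARN,
    },
]


def is_allowed(action: str, resource: str, statements=STATEMENTS) -> bool:
    """Toy evaluator: True if any Allow statement lists the action and its
    Resource pattern matches ('*' matches any run of characters)."""
    for statement in statements:
        if statement["Effect"] != "Allow":
            continue
        if action in statement["Action"] and fnmatchcase(resource, statement["Resource"]):
            return True
    return False


print(is_allowed("dynamodb:UpdateItem", TABLE_ARN))  # True
print(is_allowed("dynamodb:DeleteItem", TABLE_ARN))  # False
```

The second call shows the policy's scope. The function can increment the counter but cannot delete items, and it cannot touch any other table.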

Step 3: Packaging and Deploying the Lambda Function

In this step, we will package our Python code into a zip file and specify it as the deployment package for our Lambda function. Add the following code:

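```hcl
# Zip the contents of ./lambda. The archive is written outside the source
# directory so that later runs do not zip the previous archive into itself.
data "archive_file" "zip_the_python_code" {
  type        = "zip"
  source_dir  = "${path.module}/lambda/"
  output_path = "${path.module}/lambda_function.zip"
}

resource "aws_lambda_function" "terraform_lambda_func" {
  filename         = data.archive_file.zip_the_python_code.output_path
  # A code change changes this hash, which makes Terraform redeploy the function
  source_code_hash = data.archive_file.zip_the_python_code.output_base64sha256
  function_name    = "terraform_lambda_func"
  role             = aws_iam_role.lambda_role.arn
  handler          = "lambda_function.lambda_handler"
  # AWS has since deprecated python3.8 on Lambda; prefer a currently supported runtime
  runtime          = "python3.8"
  depends_on       = [aws_iam_role_policy_attachment.attach_iam_policy_to_iam_role]

  environment {
    variables = {
      databaseName = "visitor_count_ddb"
    }
  }
}
```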

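This code zips your Python code and deploys it as a Lambda function with the specified name, execution role, handler and runtime. The handler value `lambda_function.lambda_handler` tells Lambda to call the `lambda_handler` function in `lambda_function.py`. The DynamoDB table name is passed to the function through the `databaseName` environment variable.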
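The post does not show what is inside `lambda/`. As an illustration only, a `lambda_function.py` that fits the `handler` setting could look like the sketch below. It assumes the seed item from Step 9 (`id` = `Visits`) and the `databaseName` environment variable. It uses boto3's `Table.update_item` with an `ADD` expression, so DynamoDB performs the increment atomically. The optional `table` parameter is a hypothetical addition that lets the function run without AWS. Lambda itself calls it with only `event` and `context`.

```python
import json
import os


def lambda_handler(event, context, table=None):
    """Hypothetical visitor-counter handler for the `handler` setting above.

    Atomically adds 1 to `visitor_count` on the item whose id is "Visits"
    and returns the new value as JSON.
    """
    if table is None:
        # Imported lazily so the function can be tested without boto3/AWS.
        import boto3

        table = boto3.resource("dynamodb").Table(os.environ["databaseName"])

    response = table.update_item(
        Key={"id": "Visits"},
        UpdateExpression="ADD visitor_count :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    # boto3 returns DynamoDB numbers as Decimal; convert for JSON.
    count = int(response["Attributes"]["visitor_count"])
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"count": count}),
    }
```

The sketch does not return CORS headers. When `cors_configuration` is set on an HTTP API (Step 4), API Gateway adds them itself.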

Step 4: Creating CloudWatch Log Group and API Gateway

Now, let's create a CloudWatch log group and an API Gateway for our backend. Add the following code to your main.tf file:

```hcl
resource "aws_cloudwatch_log_group" "api_gw" {
  name              = "visitor_count_log_group"
  retention_in_days = 30
}

resource "aws_apigatewayv2_api" "lambda" {
  name          = "visitor_count_CRC"
  protocol_type = "HTTP"
  description   = "Visitor count for Cloud Resume Challenge"

  cors_configuration {
    allow_origins = ["https://estebanmoreno.link", "https://www.estebanmoreno.link"]
  }
}
```

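This code creates a CloudWatch log group that keeps the API Gateway access logs for 30 days. It also defines an HTTP API with a name, a description and a CORS configuration. The CORS settings let only pages served from https://estebanmoreno.link and https://www.estebanmoreno.link read the API's responses in the browser.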
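A browser sends an `Origin` header (scheme, host and optional port, with no path). It exposes the response to the page only if the returned `Access-Control-Allow-Origin` value matches. Below is a simplified Python model of that check, not API Gateway's actual implementation. It shows that matching is an exact string comparison, so `http://` or a trailing slash does not match. The function name is hypothetical.

```python
ALLOWED_ORIGINS = ["https://estebanmoreno.link", "https://www.estebanmoreno.link"]


def allow_origin_header(origin, allowed=ALLOWED_ORIGINS):
    """Simplified model of the CORS origin check.

    Returns the Access-Control-Allow-Origin value a browser would need to
    see for `origin`, or None if the origin is not on the list. Matching is
    an exact string comparison; a "*" entry allows any origin.
    """
    if "*" in allowed:
        return "*"
    return origin if origin in allowed else None


print(allow_origin_header("https://www.estebanmoreno.link"))  # https://www.estebanmoreno.link
print(allow_origin_header("http://estebanmoreno.link"))       # None
```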

Step 5: Setting Up API Gateway Stage

Next, let's configure the API Gateway stage for our backend. Add the following code:

```hcl
resource "aws_apigatewayv2_stage" "lambda" {
  api_id      = aws_apigatewayv2_api.lambda.id
  name        = "default"
  auto_deploy = true

  access_log_settings {
    destination_arn = aws_cloudwatch_log_group.api_gw.arn

    format = jsonencode({
      requestId               = "$context.requestId"
      sourceIp                = "$context.identity.sourceIp"
      requestTime             = "$context.requestTime"
      protocol                = "$context.protocol"
      httpMethod              = "$context.httpMethod"
      resourcePath            = "$context.resourcePath"
      routeKey                = "$context.routeKey"
      status                  = "$context.status"
      responseLength          = "$context.responseLength"
      integrationErrorMessage = "$context.integrationErrorMessage"
    })
  }

  tags = {
    Name = "Cloud Resume Challenge"
  }
}
```

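This code creates a stage named `default` for the API. Auto-deployment is enabled, so changes to the API are published without a manual deployment, and access logs are written as JSON to the CloudWatch log group from Step 4. Because this is a named stage rather than the special `$default` stage, `default` appears as a path prefix in the API's invoke URL.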
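Because each access-log entry is one JSON object, the logs are easy to process once exported from the log group. The Python sketch below counts requests per status and collects integration error messages. It assumes one JSON object per line. Every value is a string, because the format wraps each `$context` variable in quotes, and empty variables are assumed to appear as `"-"`. The sample lines and error text are hypothetical.

```python
import json
from collections import Counter


def summarize_access_logs(lines):
    """Count requests per status and collect integration errors.

    Expects one JSON object per line in the format configured above.
    """
    statuses = Counter()
    errors = []
    for line in lines:
        record = json.loads(line)
        statuses[record["status"]] += 1
        message = record.get("integrationErrorMessage", "-")
        if message not in ("", "-"):
            errors.append(message)
    return statuses, errors


# Two hypothetical, shortened log lines.
sample = [
    '{"requestId": "a1", "status": "200", "integrationErrorMessage": "-"}',
    '{"requestId": "b2", "status": "502", "integrationErrorMessage": "Lambda timed out"}',
]
counts, errs = summarize_access_logs(sample)
print(counts["502"], errs)  # 1 ['Lambda timed out']
```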

Step 6: Integrating API Gateway with Lambda Function

Now, let's integrate our API Gateway with the Lambda function we created earlier. Add the following code:

```hcl
resource "aws_apigatewayv2_integration" "terraform_lambda_func" {
  api_id             = aws_apigatewayv2_api.lambda.id
  integration_uri    = aws_lambda_function.terraform_lambda_func.invoke_arn
  integration_type   = "AWS_PROXY"
  integration_method = "POST"
}
```

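This code connects the API to the Lambda function. With the `AWS_PROXY` integration type, API Gateway forwards the whole HTTP request to the function and returns the function's response to the client. `integration_method` is `POST` because API Gateway always calls Lambda with POST, whatever method the client used.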
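Because Lambda is always invoked with POST, the client's original method travels inside the event. Where it sits depends on the payload format version. Format 1.0 is the Terraform default when `payload_format_version` is not set, as here. The hypothetical Python helper below reads the method from either format.

```python
def client_method(event):
    """Return the HTTP method the client used, for either payload format."""
    if event.get("version") == "2.0":
        return event["requestContext"]["http"]["method"]
    # Payload format 1.0 keeps the method at the top level.
    return event["httpMethod"]


print(client_method({"version": "1.0", "httpMethod": "GET"}))  # GET
print(client_method({"version": "2.0", "requestContext": {"http": {"method": "POST"}}}))  # POST
```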

Step 7: Defining API Gateway Route

Next, let's define the route for our API Gateway API. Add the following code:

```hcl
resource "aws_apigatewayv2_route" "terraform_lambda_func" {
  api_id    = aws_apigatewayv2_api.lambda.id
  route_key = "ANY /terraform_lambda_func"
  target    = "integrations/${aws_apigatewayv2_integration.terraform_lambda_func.id}"
}
```

This route sends requests for the `/terraform_lambda_func` path, made with any HTTP method (`ANY`), to the integration defined in the previous step.
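Together, the stage and the route determine the URL the frontend calls. The Python sketch below builds that URL and models route matching in a simplified way. It ignores path parameters, greedy `{proxy+}` paths and the `$default` route. The API ID `abc123` and the function names are hypothetical.

```python
def invoke_url(api_id, region, stage, path):
    """Build the public URL for a route on an HTTP API stage.

    Stages other than the special "$default" stage appear as a path prefix.
    """
    base = f"https://{api_id}.execute-api.{region}.amazonaws.com"
    if stage != "$default":
        base += f"/{stage}"
    return f"{base}/{path.lstrip('/')}"


def route_matches(route_key, method, path):
    """Simplified route matching: exact path, with ANY accepting every method."""
    route_method, route_path = route_key.split(" ", 1)
    return route_method in ("ANY", method) and route_path == path


print(invoke_url("abc123", "eu-west-2", "default", "/terraform_lambda_func"))
# https://abc123.execute-api.eu-west-2.amazonaws.com/default/terraform_lambda_func
```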

Step 8: Granting Lambda Permission for API Gateway

To allow API Gateway to invoke our Lambda function, we need to grant the necessary permissions. Add the following code:

```hcl
resource "aws_lambda_permission" "api_gw" {
  statement_id  = "AllowExecutionFromAPIGateway"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.terraform_lambda_func.function_name
  principal     = "apigateway.amazonaws.com"
  source_arn    = "${aws_apigatewayv2_api.lambda.execution_arn}/*/*"
}
```

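This resource-based permission lets the API Gateway service invoke the Lambda function, but only for requests that come from this API. The `/*/*` suffix on the API's execution ARN matches any stage, method and path.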
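To show what `/*/*` covers, the Python sketch below approximates ARN wildcard matching with `fnmatch`. Here `*` matches any run of characters, including `/`, so the second wildcard spans both method and path. This is an approximation of IAM matching, not its implementation. The account ID and API IDs are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical execution ARN: arn:aws:execute-api:<region>:<account>:<api-id>
EXECUTION_ARN = "arn:aws:execute-api:eu-west-2:123456789012:abc123"
SOURCE_ARN = f"{EXECUTION_ARN}/*/*"  # same shape as source_arn above


def permission_covers(invocation_arn, source_arn=SOURCE_ARN):
    """Approximate IAM ARN matching with shell-style wildcards."""
    return fnmatchcase(invocation_arn, source_arn)


same_api = f"{EXECUTION_ARN}/default/GET/terraform_lambda_func"
other_api = "arn:aws:execute-api:eu-west-2:123456789012:xyz789/default/GET/terraform_lambda_func"
print(permission_covers(same_api))   # True
print(permission_covers(other_api))  # False
```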

Step 9: Creating DynamoDB Table

Finally, let's create a DynamoDB table to store visitor count data. Add the following code:

```hcl
resource "aws_dynamodb_table" "visitor_count_ddb" {
  name           = "visitor_count_ddb"
  billing_mode   = "PROVISIONED"
  read_capacity  = 20
  write_capacity = 20
  hash_key       = "id"

  attribute {
    name = "id"
    type = "S"
  }

  attribute {
    name = "visitor_count"
    type = "N"
  }

  global_secondary_index {
    name            = "visitor_count_index"
    hash_key        = "visitor_count"
    projection_type = "ALL"
    read_capacity   = 10
    write_capacity  = 10
  }

  tags = {
    Name = "Cloud Resume Challenge"
  }
}

resource "aws_dynamodb_table_item" "visitor_count_ddb" {
  table_name = aws_dynamodb_table.visitor_count_ddb.name
  hash_key   = aws_dynamodb_table.visitor_count_ddb.hash_key

  item = <<ITEM
{
  "id": {"S": "Visits"},
  "visitor_count": {"N": "1"}
}
ITEM

  # Do not overwrite the item after creation, so applies never reset the live count
  lifecycle {
    ignore_changes = all
  }
}
```

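This code creates a provisioned-capacity DynamoDB table keyed on `id`, with a global secondary index on `visitor_count`, and tags it for identification. It also seeds the table with an item whose `id` is `Visits` and whose `visitor_count` starts at 1. The `ignore_changes = all` lifecycle rule stops Terraform from overwriting that item after it is created, so later applies do not reset the live count.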
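The `item` heredoc uses DynamoDB JSON, in which each value is wrapped in a type descriptor (`S` for string, `N` for number). Numbers are sent as strings. The minimal Python decoder below handles only those two types and has hypothetical function names. For full conversion, boto3 provides `TypeDeserializer` in `boto3.dynamodb.types`.

```python
import json
from decimal import Decimal


def decode_attribute(typed_value):
    """Convert one DynamoDB-typed value such as {"N": "1"} to Python."""
    [(type_tag, raw)] = typed_value.items()
    if type_tag == "S":
        return raw
    if type_tag == "N":
        # Numbers travel as strings; Decimal keeps them exact.
        return Decimal(raw)
    raise ValueError(f"type {type_tag!r} not handled in this sketch")


def decode_item(item_json):
    """Decode a whole item written in DynamoDB JSON."""
    return {name: decode_attribute(value) for name, value in json.loads(item_json).items()}


seed = '{"id": {"S": "Visits"}, "visitor_count": {"N": "1"}}'
print(decode_item(seed))  # {'id': 'Visits', 'visitor_count': Decimal('1')}
```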

Step 10: Deployment

With the configuration written, deploy it by running three commands, terraform init, terraform plan and terraform apply, from the directory that contains main.tf. Here is what each one does:

- Terraform Init: The `terraform init` command initializes the Terraform working directory. It downloads and installs the necessary provider plugins and sets up the backend configuration. This step ensures that Terraform has all the required dependencies to create and manage the infrastructure.

- Terraform Plan: The `terraform plan` command generates an execution plan for Terraform. It analyzes the current state of the infrastructure and compares it with the desired state defined in our Terraform configuration. The plan highlights the actions that Terraform will take to achieve the desired state, such as creating, modifying, or destroying resources. It helps us validate our configuration and understand the impact of the changes before applying them.

- Terraform Apply: The `terraform apply` command applies the changes defined in the Terraform configuration to the target environment. It creates, modifies, or destroys resources as needed to align the infrastructure with the desired state. During this step, Terraform will prompt for confirmation before making any changes, allowing you to review and approve the proposed changes.

Running these commands in order initializes the working directory, shows you exactly what will change, and then makes those changes. Every deployment therefore goes through the same reviewable steps, and because the sequence is scriptable, it can later be automated in a CI pipeline.
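The same sequence can be scripted. The Python sketch below saves the plan to a file and then applies that file. Applying a saved plan does not ask for confirmation, because you review the changes at the plan step instead. The plan file name `tfplan` and the injectable `runner` parameter are hypothetical choices for illustration.

```python
import subprocess


def deployment_commands(plan_file="tfplan"):
    """The three commands in order; apply uses the saved plan file."""
    return [
        ["terraform", "init"],
        ["terraform", "plan", f"-out={plan_file}"],
        ["terraform", "apply", plan_file],
    ]


def deploy(workdir=".", runner=subprocess.run):
    """Run each command in `workdir`, stopping at the first failure."""
    for command in deployment_commands():
        # check=True raises CalledProcessError on a non-zero exit code
        runner(command, cwd=workdir, check=True)
```

Calling `deploy()` from the directory that contains main.tf runs init, plan and apply in turn. In an automated pipeline you would normally review the saved plan before the apply step runs.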

Running `terraform apply` again after the resources have been created produces the output below. Terraform found no differences between the deployed infrastructure and the configuration, so it has nothing to change. This is the expected result and confirms that your infrastructure is in sync with the desired state defined in your Terraform files.

```
No changes. Your infrastructure matches the configuration.

Terraform has compared your real infrastructure against your configuration and found no differences, so no changes are needed.

Apply complete! Resources: 0 added, 0 changed, 0 destroyed.
```

With these steps completed, you have successfully built the backend infrastructure for your Cloud Resume Challenge website. In the upcoming article, I will guide you through the implementation of a GitHub Actions workflow to automate the deployment of this Terraform backend infrastructure.